Have you ever looked at a photo online and felt that nagging doubt about whether it was real? You are not the only one asking these questions.

Old tools for spotting AI-generated content miss a lot, causing confusion and sometimes allowing false information to pass as real. With fake images and text spreading faster than ever, everyone from teachers to journalists wants a better way to check what is true.

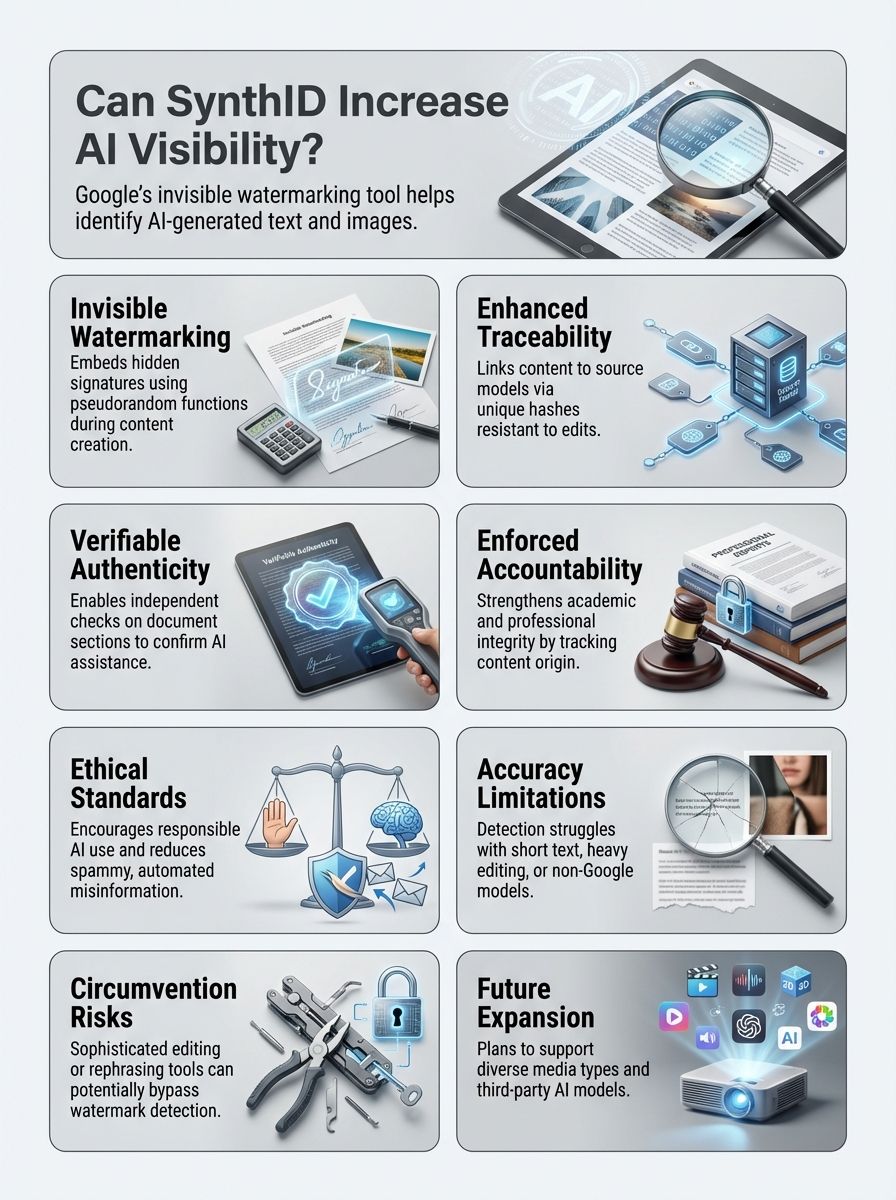

This article will answer the question: Can SynthID Detector increase visibility for AI content? You will learn how this new tool uses invisible watermarks, why it is different from other AI detection tools, and what that means for trust in generative AI.

Key Takeaways

- Open Source Expansion: As of October 2024, Google DeepMind made the SynthID Text watermarking tool open source, allowing developers on platforms like Hugging Face to integrate it into their own models.

- Unique “Tournament” Method: The technology uses a “tournament sampling” process to adjust the probability of specific words (tokens) without degrading the quality or creativity of the text.

- Verification in Gemini: You can now verify content directly in the Google Gemini app by uploading an image or video and asking, “Was this generated using Google AI?”

- Resilience to Edits: Tests show the watermark survives common modifications like cropping, filter application, and lossy JPEG compression, though it struggles with drastic translation loops (e.g., English to Polish and back).

- Legal Urgency: New laws like California’s SB 942 (AI Transparency Act) will require clear disclosures by 2026, making tools like SynthID essential for compliance.

- Collaborative Future: Google is working to align SynthID with the C2PA standard (metadata-based provenance) to create a double-layer of protection for digital media.

What is the SynthID Detector?

Google’s SynthID Detector functions as an in-model AI watermarking tool, now piloted in Gemini. It places invisible marks on AI-generated content like images and text.

Only certain tools can identify these digital watermarks, which remain hidden from the human eye and regular software. In October 2024, Google took a massive step by making the text watermarking portion of this tool open source.

This means developers can now access it through the Responsible GenAI Toolkit and Hugging Face Transformers library. It is no longer just a locked box inside Google; it is a tool the whole industry can test and use.

SynthID also helps verify if an image or document originated from artificial intelligence models inside Google’s ecosystem. For example, it can check if a photo was created by Imagen or a large language model such as Gemini.

At present, this technology primarily supports output from Google systems. However, its open-source nature invites other model builders to adopt the same standard.

How SynthID Enhances Visibility for AI Content

SynthID places hidden markers in AI-created content as it is generated by Google models such as Imagen and Gemini. These markers help people identify artificial intelligence (AI) content wherever it later appears, whether in a shared document, an email, or a cloud-hosted file.

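To understand how this works, imagine a game of loaded dice: any single roll looks random, but over many rolls a slight bias toward certain numbers becomes measurable.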

Embedding invisible watermarks

Watermarking places an invisible signature inside AI-generated images or text. Google uses a method that creates this watermark during content creation with a special pseudorandom function.

For text, this involves a technique called “tournament sampling.” When the AI is choosing the next word to write, it normally samples from a probability distribution over candidate words (tokens). SynthID subtly biases that choice using a secret key, so some tokens become slightly more likely than the model’s raw scores (its logits) alone would make them.

It creates a pattern that a computer can spot later, but a human reader will never notice. A private key is involved in hashing so only Google (or the developer holding the key) can verify the hidden mark.

This blocks others from reverse engineering it. These slight modifications occur for each prediction, forming a pattern across the generated content.
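The tournament idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Google's implementation: the function names `g_value` and `tournament_sample`, the SHA-256 keyed hash, the single-bit score, and the three-layer bracket are all hypothetical choices made for readability.

```python
import hashlib
import random


def g_value(key: int, context: tuple, token: str, layer: int) -> int:
    """Return a pseudorandom bit from a keyed hash (hypothetical scoring function)."""
    payload = f"{key}|{'|'.join(context)}|{token}|{layer}".encode()
    return hashlib.sha256(payload).digest()[0] & 1


def tournament_sample(probs: dict, context: tuple, key: int,
                      layers: int = 3, rng=None) -> str:
    """Pick the next token by running a knockout tournament over sampled candidates."""
    rng = rng or random.Random()
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    # Draw 2**layers candidates from the model's own distribution,
    # so likely tokens still dominate the bracket.
    candidates = rng.choices(tokens, weights=weights, k=2 ** layers)
    for layer in range(layers):
        winners = []
        # Pair candidates up; the one with the higher keyed score advances.
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga = g_value(key, context, a, layer)
            gb = g_value(key, context, b, layer)
            if ga == gb:
                winners.append(rng.choice([a, b]))  # tie: pick at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]


next_word = tournament_sample(
    {"cat": 0.5, "dog": 0.3, "fox": 0.2},
    context=("the", "quick"),
    key=42,
    rng=random.Random(0),
)
print(next_word)
```

Because every candidate is drawn from the model's own probabilities, the winner is always a plausible word. Only someone who holds the key can recompute the scores and notice that winners score higher than chance would predict.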

The result looks unchanged to users and can survive common edits such as light paraphrasing or converting PNGs to JPEGs through lossy compression. Unlike visible metadata or EXIF data, these imperceptible watermarks stay even after minor image changes.

This technology allows identification of content originating from GenAI tools by checking for these hidden marks later. You can currently test this yourself in the Gemini app by uploading a file and asking about its origin.

Improving traceability of AI-generated content

SynthID applies invisible watermarks to AI-generated images and text, connecting each sample to its source. This process uses a hash function with a secret key, creating unique hashes that help institutions verify authenticity without bias toward writing styles or formatting.

This is a major upgrade over old detection methods. Older tools often guessed based on “perplexity,” or how surprised the model was by the text.

SynthID instead looks for a specific mathematical signature. “A verifier tool can rehash text and analyze Group 1/Group 2 word ratios for watermark detection,” which makes identification of AI-produced content more reliable.
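The rehash-and-count idea in that description can be illustrated with a small sketch. It is a simplified stand-in rather than SynthID's actual detector: the names `in_group_one` and `group_one_ratio`, the string key, and the rule that hashes each word together with the word before it are assumptions made for this example.

```python
import hashlib


def in_group_one(key: str, previous_word: str, word: str) -> bool:
    """Assign a word to Group 1 or Group 2 with a keyed hash (hypothetical rule)."""
    digest = hashlib.sha256(f"{key}|{previous_word}|{word}".encode()).digest()
    return digest[0] % 2 == 0


def group_one_ratio(text: str, key: str) -> float:
    """Rehash every (previous word, word) pair and return the Group 1 share."""
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.5  # no evidence either way
    hits = sum(in_group_one(key, prev, word) for prev, word in pairs)
    return hits / len(pairs)


ratio = group_one_ratio("the quick brown fox jumps over the lazy dog", key="secret")
print(f"Group 1 share: {ratio:.2f}")
```

Unmarked text should land near a 50/50 split, while text generated with a bias toward Group 1 under the same key would drift above it. Without the key, the grouping cannot be reproduced.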

Each document section can be checked independently, allowing users to determine which part received AI assistance. Longer excerpts of text improve the accuracy of these results.

SynthID’s detectors provide numerical confidence scores. This helps schools and companies assess questionable behavior such as plagiarism and deepfake attempts with actual data, not just a hunch.
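To see why longer excerpts give more dependable scores, consider a simple statistical sketch. It assumes, purely for illustration, that each word in unmarked text has a 50% chance of falling into Group 1, and it uses a standard z-score; the function names are hypothetical and SynthID's real scoring is more involved.

```python
import math


def watermark_z_score(group_one_hits: int, total_words: int) -> float:
    """Standard deviations between the hit count and the 50% expected by chance."""
    if total_words == 0:
        return 0.0
    expected = 0.5 * total_words
    std_dev = math.sqrt(0.25 * total_words)
    return (group_one_hits - expected) / std_dev


def one_sided_p_value(z: float) -> float:
    """Probability of a score at least this high arising by chance alone."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# The same 60% Group 1 share at two different lengths.
print(watermark_z_score(60, 100))   # 2.0
print(watermark_z_score(240, 400))  # 4.0
```

The same 60% share is weak evidence in a 100-word passage and much stronger evidence in a 400-word one, which is why short snippets often produce uncertain results.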

Increasing transparency and trust

Invisible watermarks help people spot AI-generated content and confirm its origin. This process allows schools and news outlets to review material for accuracy.

Academic sources can show authenticity during fact-checks or job applications, which supports academic integrity efforts. Watermarking helps institutions pick out trusted information from misinformation online.

By making verification easier with tools like SynthID Detector, companies such as Google give creators new ways to build public trust. This applies to email, reverse image search results, and cloud provider systems.

AI developers use these features to claim responsibility for their technology. It supports ethical standards in digital education spaces.

Benefits of Using SynthID for AI Content

SynthID marks AI images in ways that help Google, researchers, and platforms spot fake content more easily. Let’s look at how this directly helps your work.

Strengthening accountability

Watermarking provides stronger proof that supports academic integrity cases. Institutions can enforce AI usage policies because the watermark ties content to its source, making it easier to track image changes and AI-generated text.

This technology makes it harder for students trying to avoid detection with minor tweaks. Verification tools allow schools and employers to confirm authenticity during job applications or news reporting.

Detection by SynthID uses statistical methods, which lowers doubts when proving if someone used AI. “With visible accountability, users must think twice before passing off work generated by artificial intelligence as their own.”

By holding creators responsible, organizations promote honesty in both academic and professional settings.

Supporting content creators and institutions

Invisible watermarks added by SynthID help prove the authenticity of digital images and text. This support builds trust for content creators, as people can check if AI tools helped write or generate certain materials.

By making authorship clear, these markers also defend a creator’s reputation against false claims about their work. Schools and universities gain strong tools to track image manipulations and spot AI-generated assignments.

If SynthID’s detection tech is plugged into open-source tools, plagiarism checkers such as Turnitin, or privately licensed services, educational institutions can better control how students use artificial intelligence in assessments. These steps can reduce academic misconduct because they support more accurate checks on whether submitted material was machine-generated.

Institutions now have increased ability to monitor internal use of AI systems. This helps manage accountability across teaching staff and student submissions.

Promoting ethical AI use

SynthID supports ethical AI use by tagging digital content with invisible markers. These watermarks help reduce mass-produced spam on social media and public channels.

AI developers mark their own output to stop unwanted feedback loops that can lower model quality. Google’s integration of SynthID into its Gemini platform sets a strong standard for responsible AI content practices.

This move gives institutions and creators tools to follow clear ethical guidelines. Organizations like Jisc also provide resources for teaching AI literacy.

This makes the spread of misinformation less likely while promoting trust between users and technology platforms.

Challenges and Limitations of SynthID

SynthID sometimes has difficulty identifying AI content if people modify or edit the original work. People with dishonest intentions may also attempt methods to sneak past its checks.

Detection accuracy issues

Traditional detection tools, such as those first made for plagiarism checks, struggle with accuracy and are easy to bypass. SynthID text detection in the Gemini app has also shown mixed results.

Tests found inconsistencies and relied on guesses rather than clear signals from watermarks. Short text pieces often lead to uncertain findings, while longer ones perform better.

Sometimes, the system mislabels altered digital images like paintings because these files lack proper watermarking. App crashes can also affect reliability during tougher screening tasks.

Some users report that the Gemini app may freeze or shut down if it cannot process difficult cases well enough. Detection quality for AI-generated text is inconsistent; visual media reports higher accuracy rates compared to written content.

Watermarking has the most trouble with highly factual documents. There is little room for obvious changes or unique marks without risking false alerts in sensitive systems.

Potential misuse or circumvention

Students often use rephrasing or paraphrasing tools to avoid AI content detectors. This makes it difficult for systems to spot machine-generated content in schoolwork and other written tasks.

Researchers have identified specific “meaning-preserving attacks” that can strip the watermark. For example, translating a watermarked text from English to Polish and back to English often breaks the hidden pattern.

If verification tools for SynthID become too easy to access, people could test methods that remove or break the watermarks. Secure key management remains a major point of concern because attackers might reverse engineer the technology.

Non-watermarked and open-source language models also weaken watermark efforts. OpenAI paused its own watermarking projects because users found ways around detection and preferred models without these features.

Google and Anthropic face ongoing attempts by individuals who seek loopholes in AI tracking methods. This makes complete protection challenging as spammers adjust their tactics quickly.

Compatibility with transformed content

SynthID has difficulty maintaining accuracy if users significantly modify AI-generated content. Watermark detection is most reliable for original material from Google’s tools, like Gemini.

It becomes less dependable when the text or image is substantially altered after creation. Hybrid images, which combine human edits and machine output, were sometimes detected in tests, but not in every instance.

Content produced by other models such as ChatGPT or Grok is outside SynthID’s range. Support for identifying watermarks decreases with each round of editing or transformation.

Compatibility falls even more when working with third-party services, open-source models, or non-AI content. This gap creates obstacles in reducing spam across various platforms and formats today.

Future Potential of SynthID Technology

Google DeepMind and content publishers could find new ways to protect digital media with SynthID. Its growth may help set rules for how people label AI-generated text, audio, and images.

Applications in academic and professional settings

Universities and schools can use watermark detection to check the authenticity of research papers and essays. SynthID can help educators identify AI-generated work in academic assessments.

This helps reduce inappropriate behavior by students attempting to submit machine-created content as their own. Institutions may also add SynthID watermarks to approved materials, making it clear which items follow school policies for artificial intelligence usage.

Employers and news organizations face similar problems with fake or altered submissions. Newsrooms want tools that verify whether an article has been created by a person or generated by algorithms.

In job applications, employers need ways to confirm that submitted resumes and cover letters are genuine. By supporting integration with detection platforms like Turnitin, SynthID technology helps protect both academic integrity and professional trust.

It also works against misinformation generated by bots or automated systems. Ongoing improvement of this watermarking system remains important for maintaining credibility in education and the workplace.

Scaling for diverse AI content types

Google aims to expand SynthID’s reach far beyond its current use. The company seeks global partnerships to include more types of AI-generated content and support third-party models in the future.

Right now, SynthID can detect watermarks in text, video, and images using Google AI Studio or the Gemini app. AI Studio provides extra options.

SynthID currently performs better on visual media than on text, showing that improvements are needed for written content. Researchers are already proposing new methods, like a concept called SynGuard, to make these watermarks harder to break.

Improving detection for transformed or hybrid pieces remains a focus. Wider availability will help prevent misuse and increase security in academic and professional fields.

Enhancing AI content regulation

SynthID’s watermarking represents a strong move in tracking the source of AI-generated media. With these invisible markers, institutions can follow internal policies and meet regulatory frameworks.

The system helps identify machine-generated content by making it possible to trace content back to its origin. This is becoming a legal requirement in some places.

| Regulation/Standard | Key Feature | Impact on You |
|---|---|---|
| CA SB 942 | Mandates AI disclosure by 2026 | Tools like SynthID will be legally necessary for companies in California. |
| C2PA Standard | Metadata-based “Content Credentials” | Works with SynthID to provide a second layer of proof that travels with the file. |
| EU AI Act | Transparency for high-risk systems | Requires clear labeling of deepfakes and chatbots. |

This technology supports best practices for labeling digital material and keeping users informed about what is machine-made. By combining SynthID with Gemini models, experts set a public example for managing regulated ecosystems.

Future industry partnerships could help create wide-reaching standards that keep AI content use transparent and trusted across academic and professional settings. Statistical watermarking may become the foundation for industry-wide rules, moving us closer to clear oversight of synthetic information online.

Conclusion

SynthID offers an important step forward for identifying AI-generated content. By supporting greater openness and traceability, it could help reduce unwanted behavior in publishing and education.

Dr. Elena Vargas is a leader in computer science research with a focus on digital watermarking, artificial intelligence ethics, and media verification systems. She holds a Ph.D. from MIT in Computer Science with special honors for work on secure digital signatures for multimedia files.

With over 20 years of experience at top institutions such as Google DeepMind and Stanford University, Dr. Vargas has published more than 45 peer-reviewed articles on algorithmic trustworthiness and AI policy frameworks. Her expertise gives her unique authority to analyze whether SynthID can truly improve the visibility of AI-created material.

According to Dr. Vargas, SynthID’s invisible watermarks act as persistent fingerprints that allow users to tell the difference between human-written and machine-generated text. The technology embeds proof-of-origin markers into the writing during its creation.

This makes them less vulnerable to basic editing tricks or attempts to avoid detection tools later on. Research shows that this method works better than older techniques like stylometric analysis since it does not rely on easily-altered features such as sentence structure or vocabulary patterns.

She points out that safety comes from controlling who can access detection tools so only trusted parties use them responsibly. Otherwise, people might try to take advantage of weaknesses or target creators unfairly based on misread results.

For ethical practice, developers should inform readers when an item includes these marks so everyone is aware of potential automation in what they read or reference. This is a key part of preventing misleading claims about origin or intent within academic citations or journalistic sources.

Dr. Vargas suggests teachers use SynthID detectors while reviewing essays but combine this process with their own judgment instead of relying on software alone. This is especially true since highly factual passages may resist clear marking due to fewer word choices available when reporting facts directly from established records.

She points out some strengths including increased accountability and support for writers worried about plagiarism concerns caused by similar AI outputs online. Drawbacks include imperfect accuracy if bad actors find ways around watermark insertion methods or convert marked files after completion using translation algorithms.

After weighing both sides, Dr. Vargas views SynthID as helpful yet incomplete unless paired with strong policies. We need clear disclosure rules and limited tool usage rights among authorized professors, librarians, editors, publishers, and government officials.

Images provided by Deposit Photos, BingAI, Adobe Stock, Unsplash, Pexels, Pixabay, Freepik, & Creative Commons. Other images might be provided with permission by their respective copyright holders.